Quickstart

ResLib can be used solely as a flexible dependency-checking engine. This use case is covered in the Pipelines section.

Additionally, ResLib provides functionality for projects that use Python for some or all of their data cleaning or analysis. This is covered in the Python Library section.

Pipelines

Dependency tracking of pipelines in ResLib is inspired by Doit. The DependencyScanner allows for parsing and extracting dependencies from source code through the use of specific comment tags.

There are three such comment tags:

- INPUT for when reading in a dataset
- INPUT_FILE for when running another file inline, like a load-function in Python or running a do file in Stata
- OUTPUT for defining which datasets the current file creates

The DependencyScanner matches inputs to outputs to create the DAG.
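To make the matching step concrete, here is a minimal standalone sketch of the idea. It is not ResLib's implementation: the regular expression, the `scan_text` and `build_edges` helpers, and the file names in the sample dictionary are all assumptions made for illustration, and ResLib's real parser may accept a different comment syntax.

```python
import re

# Hypothetical pattern: a tag name, an optional colon, then a single path.
# Longer tag names come first so INPUT does not shadow INPUT_FILE.
TAG_RE = re.compile(r"\b(INPUT_FILE|INPUT_DATASET|INPUT|OUTPUT)\b:?\s+([^\s*]+)")


def scan_text(source):
    """Return the (tag, path) pairs found in one file's text."""
    return TAG_RE.findall(source)


def build_edges(files):
    """Turn {filename: source} into a list of (parent, child) edges."""
    edges = []
    for name, source in files.items():
        for tag, path in scan_text(source):
            if tag == "OUTPUT":
                edges.append((name, path))  # the file creates the dataset
            else:
                edges.append((path, name))  # the file depends on the path
    return edges


# Hypothetical file names and contents, for illustration only.
files = {
    "export.sas": "/* INPUT_DATASET funda.sas7bdat */\n/* OUTPUT: stata_data.dta */",
    "load_data.do": "/* INPUT_DATASET stata_data.dta */",
}
edges = build_edges(files)
```

Here the dataset `stata_data.dta` appears once as a child of `export.sas` and once as a parent of `load_data.do`, which is what links the two files in the graph.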

The idea is that if you add INPUT/OUTPUT comments to your existing code, creating the DAG should be trivially easy, as the simple example below shows.

As an example of how to integrate the dependency scanning into your project, assume the following three files exist in the ~/projects/example folder:

```sas
/* INPUT_DATASET funda.sas7bdat */
PROC EXPORT DATA=funda OUTFILE= "data/stata_data.dta"; RUN;
/* OUTPUT: stata_data.dta */
```

```stata
/* INPUT_DATASET stata_data.dta */
use "data/stata_data.dta", clear
```

```stata
/* INPUT_FILE: load_data.do */
do "code/load_data.do"
```

Then the following would create a graph output at pipeline.png:

```python
from reslib.automate import DependencyScanner, SAS, Stata

# Just scan for SAS and Stata code, located in the code directory.
ds = DependencyScanner(project_root='~/projects/example/',
                       code_path_prefix='code', data_path_prefix='data')
print(ds)
ds.DAG_to_file("pipeline.png")
```

Running this prints the following:

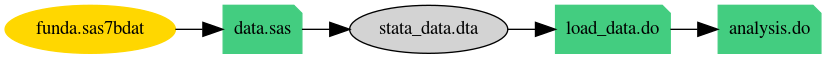

The script also creates pipeline.png, which contains the graphed DAG.

The colors in the image are intended to be informative at a glance. Green indicates the files that were scanned, and grey indicates the datasets. Yellow indicates that a dependency doesn’t have a parent (this only applies to datasets). Lastly, if the pipeline isn’t a DAG because it is cyclic (File A creates a dataset for File B, which creates a dataset used in File A), the background turns red to signal an error.
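The cycle check and the color scheme described above can be sketched as follows. This is an illustrative approximation, not ResLib's code: the `is_dag` and `node_color` helpers and the `(parent, child)` edge representation are assumptions, and the sketch relies on the standard-library `graphlib` module (Python 3.9 or later).

```python
from graphlib import CycleError, TopologicalSorter


def is_dag(edges):
    """Return True when the (parent, child) edges contain no cycle."""
    sorter = TopologicalSorter()
    for parent, child in edges:
        sorter.add(child, parent)  # child depends on parent
    try:
        sorter.prepare()  # raises CycleError if a cycle exists
    except CycleError:
        return False
    return True


def node_color(node, edges, scanned_files):
    """Pick a display color following the scheme described in the text."""
    if node in scanned_files:
        return "green"  # a scanned code file
    has_parent = any(child == node for _, child in edges)
    return "grey" if has_parent else "yellow"  # dataset with or without a parent


# Hypothetical pipeline: a.sas -> d1 -> b.do
acyclic = [("a.sas", "d1"), ("d1", "b.do")]
# Adding b.do -> d0 -> a.sas closes the loop described above.
cyclic = acyclic + [("b.do", "d0"), ("d0", "a.sas")]
```

With these inputs, `is_dag(acyclic)` is true and `is_dag(cyclic)` is false, which corresponds to the case where the background turns red.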

Individual files can be omitted from the scan by adding the comment RESLIB_IGNORE: True (the value may be true, yes, or 1, all case-insensitive).
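As a rough illustration of how such a flag could be recognised, the sketch below accepts the three values named above in any letter case. The `IGNORE_RE` pattern and the `should_ignore` helper are hypothetical and are not taken from ResLib.

```python
import re

# Hypothetical pattern: matches "RESLIB_IGNORE: <value>" anywhere in a file.
IGNORE_RE = re.compile(r"RESLIB_IGNORE:\s*(\w+)")
TRUTHY = {"true", "yes", "1"}


def should_ignore(source):
    """Return True if the text carries a truthy RESLIB_IGNORE comment."""
    match = IGNORE_RE.search(source)
    return bool(match) and match.group(1).lower() in TRUTHY
```

Any other value, such as `False`, leaves the file in the scan under this sketch.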

The DependencyScanner has many settings, the salient ones being:

- project_root: Path to the ‘root’ directory, from which relative paths to input/output file dependencies will be calculated. (Default = '.')
- code_path_prefix: Path to the ‘code’ directory, relative to the project_root. To make the full path to a code file, the prefix is appended to the project root, followed by the path defined in the INPUT/OUTPUT comment. (Default = None)
- data_path_prefix: Path to the ‘data’ directory, relative to the project_root. To make the full path to a data file, the prefix is appended to the project root, followed by the path defined in the INPUT/OUTPUT comment. (Default = None)
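The path construction described for these settings can be sketched with `pathlib`. The `resolve` helper below is hypothetical and only mirrors the description (root, then prefix, then the path from the comment); the absolute root used in the example is likewise an assumption chosen so the result does not depend on the current user's home directory.

```python
from pathlib import Path


def resolve(project_root, prefix, tagged_path):
    """Join project root, optional prefix, and the path from a comment."""
    base = Path(project_root).expanduser()  # expands a leading "~"
    if prefix is not None:
        base = base / prefix
    return base / tagged_path


# Hypothetical absolute root, for illustration only.
data_file = resolve("/projects/example", "data", "stata_data.dta")
code_file = resolve("/projects/example", "code", "load_data.do")
```

When a prefix is left at None, the path from the comment is taken relative to the project root directly.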